| Issue |

Int. J. Metrol. Qual. Eng.

Volume 15, 2024

|

|

|---|---|---|

| Article Number | 6 | |

| Number of page(s) | 30 | |

| DOI | https://doi.org/10.1051/ijmqe/2024003 | |

| Published online | 25 April 2024 | |

Research article

Application of maximum statistical entropy in formulating a non-gaussian probability density function in flow uncertainty analysis with prior measurement knowledge

Department of Mechanical, Bioresources and Biomedical Engineering, University of South Africa, Private Bag X6, Florida 1710, South Africa

* Corresponding author: ramnav@unisa.ac.za

Received:

24

October

2023

Accepted:

27

February

2024

In mechanical, civil and chemical engineering systems the accuracies of flow measurement instruments is conventionally specified by certified measurement capabilities (CMCs) that are symmetric, however it is physically possible for some flow instruments and equipment to exhibit asymmetric non-Gaussian behaviour. In this paper the influence of non-Gaussian uncertainties is investigated using direct Monte Carlo simulations to construct a probability density function (PDF) using representative non-Gaussian surface roughness data for a commercial steel pipe friction factor. Actual PDF results are compared and contrasted with a symmetric Gaussian PDF, and reveal inconsistencies in the statistical distributions that cannot be neglected in high accuracy flow measurements. The non-Gaussian PDF is visualized with a kernel density estimate (KDE) scheme to infer an initial qualitative shape of the actual PDF using the approximate locations of the normalized peaks as a initial metrologist estimate of the measurement density. This is then utilized as inputs in a maximum statistical entropy functional to optimize the actual non-Gaussian PDF using a nonlinear optimization of Lagrange multipliers for a mathematically unique PDE. Novelties in the present study is that a new methodology has been developed for statistical sampling from non-monotonic non-Gaussian distributions with accompanying Python and Matlab/GNU Octave computer codes, and a new methodology for utilizing metrologist's expert prior knowledge of PDF peaks and locations for constructing an a priori estimate of the shape of unknown density have been incorporated into the maximum statistical entropy nonlinear optimization problem for a faster and more efficient approach for generating statistical information and insights in constructing high accuracy non-Gaussian PDFs of real world messy engineering measurements.

Key words: Pipe flow friction uncertainty / Monte Carlo / non-Gaussian / statistical entropy / optimization

© V. Ramnath, Published by EDP Sciences, 2024

This is an Open Access article distributed under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This is an Open Access article distributed under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

1 Introduction

1.1 Research motivation

In mechanical, civil and chemical engineering systems the accuracies of flow measurement instruments is conventionally specified by certified measurement capabilities (CMCs) that are symmetric, and are typically modelled as Gaussian, rectangular or Student's t-distributions. For most practical industrial problems knowledge of an expected value µ and an estimate of an equivalent standard deviation σ, usually estimated via a standard uncertainty σ = u(x), is sufficient to model the statistical distribution via an appropriate symmetric Probability Density Function (PDF), with the use of the Kline and McClintock uncertainty analysis technique [1] that is now a standardized engineering uncertainty method.

On the other hand, for contemporary scientific metrology problems in many national metrology institute and commercial calibration laboratories the Guide to the Expression of Uncertainty in Measurement that is commonly simply abbreviated as the GUM [2], offers more measurement accuracy refinements. These refinements occur where a Gaussian PDF is replaced with a Student's t-distribution through an appropriate calculation of an equivalent degrees-of-freedom veff which allows for the “width” of the PDF to be refined.

These refinements are utilized in calibration certificates and laboratory inter-comparisons in order to more accurately incorporate the statistical dispersion of the tails of a Gaussian distribution and to incorporate correlation effects via covariance matrices for nonlinear measurement models as discussed by Ramnath [3] which generalized and extended earlier work by Kang et al. [4] and Ramnath [5] for the special case of linear statistical regression model parameter uncertainties. More advanced correlation models that extend the concept of covariance matrices include the use of parametric and non-parametric models that incorporate linear error sources and non-linear error sources through the use of a squared exponential covariance matrix function as reported by Tang et al. [6]. These more complex higher order correlation models may sometimes be necessary in high accuracy measurement science work when traditional covariance matrices for linearised multi-physics models such as coordinate measuring machines which incorporate a mixture of mechanical, electrical and optical sub-systems as reported by Habibi et al. [7] and Habibi et al. [8] are insufficient. By contrast, when little detailed uncertainty information is available such as simply a best estimate of a range of values x ∈ [a, b] at a confidence level of say 95%, then under these circumstances an appeal is made to maximal statistical entropy arguments by Bretthorst et al. [9]. Under this scheme that was subsequently adopted by the GUM, the approach is to instead simply model the underlying PDF as a rectangular distribution.

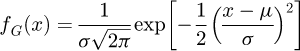

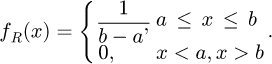

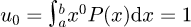

Particular examples according to the GUM for non-Gaussian symmetric PDFs that may be approximately converted to equivalent symmetric Gaussian PDFs include that of a rectangular PDF with a half-width interval a i.e. for x ∈ [− a, b] which may be approximately converted to an equivalent Gaussian PDF by setting  or for a symmetric triangular PDF with a half-width interval of α which may be converted by setting

or for a symmetric triangular PDF with a half-width interval of α which may be converted by setting  such that x ∼ fG(x) = N(0, σ2) with appropriate shifts/translations if the expected value µ is not centred at zero, under the assumption that the underlying PDF is appropriately scaled and normalized such that

such that x ∼ fG(x) = N(0, σ2) with appropriate shifts/translations if the expected value µ is not centred at zero, under the assumption that the underlying PDF is appropriately scaled and normalized such that  . Examples of the application of asymmetric PDFs utilized in recent engineering research work include the application of the Weibull distribution as studied by Kohout [10] in materials testing work and by Liu et al. [11] in rolling bearing life work, and the Rayleigh distribution as studied by Rezaei and Nejad [12] for the effect of wind speed distribution on wind turbine loading and life duration. Technical limitations with the use of Weibull and Rayleigh distributions center on their lack of general flexibility in modelling the extent of the skewness and kurtosis.

. Examples of the application of asymmetric PDFs utilized in recent engineering research work include the application of the Weibull distribution as studied by Kohout [10] in materials testing work and by Liu et al. [11] in rolling bearing life work, and the Rayleigh distribution as studied by Rezaei and Nejad [12] for the effect of wind speed distribution on wind turbine loading and life duration. Technical limitations with the use of Weibull and Rayleigh distributions center on their lack of general flexibility in modelling the extent of the skewness and kurtosis.

The challenge with the above approaches is that it may physically be possible for some instruments, equipment and measurement systems to exhibit non-Gaussian behaviour that cannot be adequately modelled by symmetric Gaussian, Student's t-distribution, rectangular, Weibull or Rayleigh distributions.

This phenomenon has been investigated by Possolo [13] who reported on asymmetrical measurement uncertainties which were encountered in half-life radioactive decays, astronomical measurements, the absorption cross-section of ozone in atmospheric physics, chemical purity measurements, and banking financial inflation forecasting predictions. Rather than “symmetrizing” asymmetric uncertainties, i.e. by fitting a symmetric uncertainty envelop that is sufficiently broad to encompass the asymmetries by “over-estimating” the uncertainty in order to be conservative so that there is a built in safety-factor in the estimate for the measurement uncertainty, Possolo [13] instead investigated and recommended alternatives to directly modelling asymmetries through the use of the Fechner distribution, the skew-normal distribution, and the generalized extreme value (GEV) distribution. A technical limitation with these proposed distributions is they cannot be readily adapted to PDFs that exhibit double or even multiple peaks, and this presents a current research gap in metrology uncertainty analysis work.

At the present time of writing the state of the art in scientific metrology uncertainty encompasses the use of the GUM and the GUM Supplements which may be considered as specific special cases of a more generalized Bayesian statistics uncertainty analysis as reported by Forbes [14]. An early example of such a Bayesian statistics approach applied to a metrology uncertainty problem was reported by Burr et al. [15] who implemented a Bayesian statistics formulation as an alternative to a more commonly utilized Markov Chain Monte Carlo (MCMC) approach for determining the covariance matrix in a least-squares straight line statistical regression. It is anticipated that future revisions of the GUM in the next decade will incorporate a more mathematically rigorous Bayesian statistics theoretical framework for metrologists working at national metrology institutes and national measurement laboratories.

1.2 Research objective

In this paper, the research objective is to perform an investigation to study the influence of asymmetric non-Gaussian PDF uncertainties on flow measurement systems which exhibit multiple peaks of pipe wall surface roughness measurements which cannot be readily modelled through skewed unimodal distributions, and to examine how these effects influences the performance and measurement accuracies of hydraulic frictional factors in pipes in order to address this research gap in flow metrology asymmetric and non-Gaussian uncertainty analysis. An additional research objective, is to develop appropriate mathematical tools that may be utilized to incorporate prior measurement knowledge in optimizing the construction of an asymmetric non-Gaussian PDF.

1.3 Research approach

The research approach utilized in this paper, is to first perform a literature review of the available statistical theory in Section 2.1 to understand the current limitations in non-Gaussian PDFs for measurement uncertainty. Then in Section 2.2 a review of the corresponding literature for the fluid mechanics of pipe friction flow models is conducted to summarize the available level of scientific knowledge.

After the literature review is completed, in Section 3.1 the mathematical justification of how to combine two different data sets of PDFs of experimental measurements using the technique of statistical conflation is outlined for the physical flow measurement problem that is considered in this paper. Then in Section 3.2 the method of maximal statistical entropy is summarized with relevant formulae, which completes the mathematical formation of the research problem.

Numerical simulations are then performed in Section 4.1 to implement a statistical conflation of non-Gaussian pipe surface roughness data sets that synthesizes the data into a single statistically coherent dataset. In Section 4.2 a new statistical sampling method for performing statistical draws from a non-Gaussian PDF is mathematically derived. An algorithm implementation for the new statistical sampling scheme is also developed. The algorithm is implemented with software code written in Python and Matlab/GNU Octave and the software routines are Validated and Verified (V&V'ed). Data from the non-Gaussian pipe surface roughness measurements are then utilized in Section 4.3 as inputs for the Colebrook mathematical model of a pipe friction factor, by sampling from the PDFs and using these statistical samples in Monte Carlo simulations in Section 4.4. The Monte Carlo data is then post-processed with a Kernel Density Estimate (KDE) algorithm to construct the final non-Gaussian PDF of the pipe friction factor. In Section 4.5 a new approach to incorporate a priori knowledge from a metrologist is developed that may then be used as an input into the Maximum Statistical Entropy (MaxEnt) method, to refine the PDF from statistical moments that are calculated from the Monte Carlo based cumulative distribution function (CDF). The MaxEnt method is then implemented with all of the earlier calculation steps in Section 4.6, and the results are analysed where a flowchart summarises the overall methodology.

Finally, in Section 5 the results are discussed, and conclusions are reported. In Section 6, the influences and implications in point form are summarized.

2 Literature review

2.1 Statistical theories

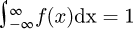

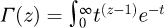

A Gaussian probability density function (PDF) fG(x), a Student's t-distribution PDF fS(x), or a rectangular PDF fR(x) where x is a random variable, µ is an expected value, σ is a standard deviation where σ2 is a corresponding variance, υ is a number of degrees of freedom, and Γ(Z) is the Gamma function defined as  for a complex argument z ∈ ℂ if Real(z) > 0, are all commonly and presently utilized symmetric PDFs that take the following forms:

for a complex argument z ∈ ℂ if Real(z) > 0, are all commonly and presently utilized symmetric PDFs that take the following forms:

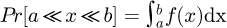

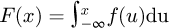

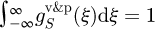

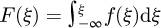

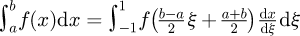

For measurement uncertainty work the PDF will be a convenient tool to perform an analysis, where for a univariate distribution with a random variable x the corresponding PDF f(x) is defined such that  where the cumulative distribution function is

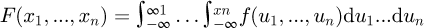

where the cumulative distribution function is  . A multivariate distribution for the joint probability density function for random variables x1, ..., xn in an n-dimensional space ℝn is defined such that Pr[x1, ..., xn ∈ D] = fDf(u1, ..., un)du1...dun where D ⊂ ℝN is a some subset of random variable points within the domain. The corresponding cumulative distribution function (CDF) is defined in terms of the PDF as

. A multivariate distribution for the joint probability density function for random variables x1, ..., xn in an n-dimensional space ℝn is defined such that Pr[x1, ..., xn ∈ D] = fDf(u1, ..., un)du1...dun where D ⊂ ℝN is a some subset of random variable points within the domain. The corresponding cumulative distribution function (CDF) is defined in terms of the PDF as  by standard statistical theory.

by standard statistical theory.

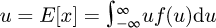

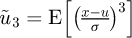

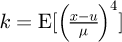

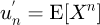

If a PDF is defined then the distribution may be analysed in terms of the expectation  where E[x] denotes a statistical expectation, the variance is σ2 = V[x] = E[(x-μ)2] where σ is a standard deviation, the skewness is

where E[x] denotes a statistical expectation, the variance is σ2 = V[x] = E[(x-μ)2] where σ is a standard deviation, the skewness is  , and finally the kurtosis is

, and finally the kurtosis is  . A majority of algebraic distributions limit the fit to these four parameters as typically only the expectation, variance, skewness and kurtosis have physical meaning in experimental measurements, and to make the analysis more tractable. Additional higher order statistical central moments defined as uk = E[(x − u)k], , which differ from raw non-central moments defined as un = ∫ xxnf(x)dx, are statistically valid but are however not generally considered in existing metrology work since in addition to not typically having any direct physical experimental meaning they are also not generally possible to experimentally measure.

. A majority of algebraic distributions limit the fit to these four parameters as typically only the expectation, variance, skewness and kurtosis have physical meaning in experimental measurements, and to make the analysis more tractable. Additional higher order statistical central moments defined as uk = E[(x − u)k], , which differ from raw non-central moments defined as un = ∫ xxnf(x)dx, are statistically valid but are however not generally considered in existing metrology work since in addition to not typically having any direct physical experimental meaning they are also not generally possible to experimentally measure.

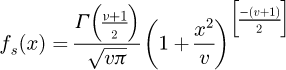

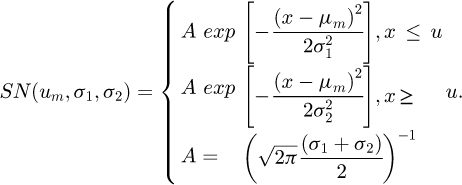

In earlier work by Possolo [13] the use of the Fechner distribution, the skew-normal distribution, and the generalized extreme value (GEV) distribution were proposed as possible distributions to model univariate asymmetries. The Fechner distribution denoted as SN(u, σ1, σ2) is also known as the two-piece normal, binormal or double Gaussian distribution as reported by Wallis [16] and has a PDF of the form

The above distribution has three real parameters, namely a mode value µm (the mode µm is distinct and not equal to the expected value µ), a left-hand-side standard deviation σ1> 0, and a right-hand-side standard deviation σ2 > 0. A multivariate version of the Fechner distribution has been proposed by Villani and Larsson [17] for a p-dimensional random variable x ∈ ℝp which is referred to as a q-split normal distribution SNp(μ, Σ, τ, Q). At the present time of writing there does not exist any generally accepted statistical distributions in analytical form that can conveniently model multiple peaks with varying levels of skewness in metrology uncertainty analysis work beyond these bimodal PDFs which can model only moderate forms of asymmetric skewness, and this presents a research gap in contemporary engineering measurement research.

An alternative more modern skew-normal distribution was originally developed by Azzalini and Capitanio [18] for a k-dimensional random variable z1,…,zk such that z ∼ SNk(Ω, a) where A is a non-singular k×k matrix such that ATΩA is a correlation matrix Σ and a is a vector of parameters. Computations with an Azzalini skew-normal distribution may conveniently be performed with a software library developed by Azzalini [19] in the R statistical computing language. Building on the earlier work of Azzalini and Capitanio a newer form of a multivariate alpha skew Gaussian distribution was subsequently developed and reported by Ara and Louzada [20].

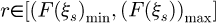

In a different technical approach from the above reported work, asymmetric distributions may also be modelled with extended lambda distributions (ELDs). The use of ELDs in metrology work was originally in a simpler classical form reported by Willink [21] and then in later more powerful forms such as the extended generalized lambda distribution (EGLD) forms as reported by Acar et al. [22], Corlu and Meterelliyoz [23], and Noorian and Ahmadabadi [24] amongst other researchers. Computational statistical libraries such as the EGLD package have been developed by Wang [25], and later a generalised lambda distribution GLDEX package has been developed by Su et al. [26]. Another possibility for modelling asymmetric distributions is to utilize a generalized extreme value (GEV) distribution where the PDF takes the form

In the above formula the set of curves is technically only strictly mathematically valid for  if ξ > 0, and then for

if ξ > 0, and then for  ξ < 0 where the random variable is x and the transformed variable s is defined as

ξ < 0 where the random variable is x and the transformed variable s is defined as  where u is a location parameter which is not equal to the mean and σ is a positive scale parameter. The GEV distribution essentially combines the Gumbel, Frechet and Weibull distribution families into a single synthesized distribution that combines characteristics of these underlying constituent distributions. Further theoretical details for generating GEVs are reported in more technical detail by Muraleedharan et al. [27].

where u is a location parameter which is not equal to the mean and σ is a positive scale parameter. The GEV distribution essentially combines the Gumbel, Frechet and Weibull distribution families into a single synthesized distribution that combines characteristics of these underlying constituent distributions. Further theoretical details for generating GEVs are reported in more technical detail by Muraleedharan et al. [27].

Although Fechner, skew-normal families of varying configurations, ELDs, EGLDs, GLDs and GEVs distributions of varying complexities all have their respective merits for modelling asymmetric distributions, a key technical limitation is that all of these approaches inclusive of even newer ones such as generalized Marshal-Olkin transformation based distributions reported by Klakattawi et al. [28], are all fundamentally restricted to uni-modal single peak distributions and cannot incorporate multiple peaks when fitting an underlying statistical distribution.

If a PDF exhibits multiple peaks then a fit that is only able to incorporate a single peak with varying levels of skewness, would essentially produce a PDF envelope that is sufficiently broad to “over-fit” the underlying PDF, but which would over-estimate the associated uncertainty. This phenomenon of utilizing simplified forms of PDFs has been observed to occur when performing a GUM based uncertainty analysis where the Gaussian or Student's t-distribution for the PDF approximation has been observed to be significantly “fatter” when compared to a more accurate Monte Carlo constructed PDF that is more “narrow” to the extent that an over-fitted symmetric PDF that can envelope the actual PDF introduces unrealistic and inaccurate predictions of the actual measurement uncertainty.

In principal, a copula as originally proposed by Possolo [29] may be used to model an arbitrary univariate or multivariate distribution inclusive of non-Gaussian and/or multiple peak behaviour. At the present time of writing, the use of copulas is more widely known and utilized in scientific metrology uncertainty analysis work, than models such as the squared exponential covariance matrix by Tang et al. [6] as discussed earlier, since copulas can theoretically model arbitrary levels of higher order covariance effects with appropriate choices of copulas and marginal distribution functions. Particular examples of the latest research in copula theory, include the use of the empirical beta copula as discussed by Segers et al. [30], and the use of the empirical Bernstein copula and the empirical checkerboard copula as discussed by Lu and Ghosh [31]. This approach of using copulas has previously been applied by Ramnath [32] for bivariate models with weakly non-Gaussian distributions of the form f(x1, x2) = F(x1)G(x2)C(F(x1), G(x2) ; θ) where F(x1) = μ and G(x2) = ν are marginal cumulative distributions which may be modelled with quantile functions (QFs) or with ELDs of varying complexity.

Copulas when used to model PDFs utilize a function c(F(x1), G(x2) ; θ) that is known as a copula density which is calculated as  and where C is the copula function and θ = (θ1, ..., θq) is an appropriate copula parametrization factor based on the choice of a copula family that models the coupling effect between independent random variables, and is usually done from a selection of parametrized families of copulas based on optimizations in standard statistical software packages. As a result for most practical cases when fitting copulas to a PDF the information for the value of q is relatively small and it is common to use a single parameter with q =1 or at most three or four parameters so that θ = (θ1) or θ = (θ1, θ2, θ3) for example, and to retain more complexity in the marginal distributions. A challenge for constructing a wholly analytical approach is to select appropriate parametrizations of the marginal distributions once the optimal copula density is optimized. The use of copulas generally requires a very large number of Monte Carlo simulation events in order to accurately fit an appropriate copula model.

and where C is the copula function and θ = (θ1, ..., θq) is an appropriate copula parametrization factor based on the choice of a copula family that models the coupling effect between independent random variables, and is usually done from a selection of parametrized families of copulas based on optimizations in standard statistical software packages. As a result for most practical cases when fitting copulas to a PDF the information for the value of q is relatively small and it is common to use a single parameter with q =1 or at most three or four parameters so that θ = (θ1) or θ = (θ1, θ2, θ3) for example, and to retain more complexity in the marginal distributions. A challenge for constructing a wholly analytical approach is to select appropriate parametrizations of the marginal distributions once the optimal copula density is optimized. The use of copulas generally requires a very large number of Monte Carlo simulation events in order to accurately fit an appropriate copula model.

Originally the modelling of marginal distributions for univariate distributions was analytically solved through the fitting of extended lambda distributions through the calculation of statistical moments as proposed by Harris et al. [33]. When this approach was investigated it was concluded by Ramnath [32] that this method is sufficiently accurate for weakly non-Gaussian univariate or multivariate marginal distributions that have asymmetries with single peaks. In the particular case where there is a strongly non-Gaussian behaviour, a mixed analytical/numerical approach may be used that incorporates an analytical form for the copula density whilst a pure numerical calculation of the marginal distributions through empirical cumulative distributions for F(x1 and G(x2) may be used.

A proposed approach for a wholly analytical construction of a PDF or a joint PDF to avoid non-parametrized empirical distributions may be achieved by following the earlier work by Harris et al. [34] to utilize a quantile function constructed as a polynomial B-spline function of sufficiently high order and associated number of knots to model the underlying behaviour of either a univariate or marginal distributions with a vector parameter θ that contains the terms for the fitting of a B-spline.

For most practical metrology problems, particularly for high accuracy scientific metrology work in national measurement primary standard scale realizations and commercial industrial calibration laboratories, to achieve a meaningful quality of fit with splines for arbitrarily complicated “messy” functions would typically require anywhere from 100 to 1000 data points, however a fundamental limitation with even a very high order polynomial is that of Runge's phenomenon, which tends to introduce artificial oscillations near the end-points of the interpolated domain. A practical alternative to mitigate against the Runge phenomenon is the use of splines as discussed earlier by Harris [33] who addressed this technical issue with the use of B-splines.

Whilst the use of B-splines as a linear combination of piece-wise basis functions is an improvement on the use of oscillatory polynomials or cubic splines, there may nevertheless still be an excessive number of parameters associated with the spline knot locations and parameters. The earlier work by Ramnath [32] also exhibits a technical limitation in terms of the unimodal single-peak nature of the fitted PDF, even when asymmetry and skewness can be modelled may be modelled with more complex non-Gaussian models such as the Fechner or GEV distributions, and thus a suitable compromise between the number of parameters and an ability to model multiple peaks in distributions is desirable.

If a suitable alternative method could be developed to accommodate multiple possible peaks with a more manageable number of fitted parameters and non-monotonic behaviour is possible, then this approach when adopted with a wholly analytical model for a PDF f(x) where x = (x1, ...xn) may instead be constructed through a set of curves for each of the underlying marginal distributions F1(x1) = μ1, ..., Fn(xn) = μn with independent parametrizations θ1, ..., θn for each of the fitted curve terms. In general the length of θi may or may not be equal to the length of θj for i≠j, and then an analytical copula C(µ;θ) where μ = (μ1, ..., μn) and Θ = (Θ1, ..., Θm) is another vector of parameters of suitable length m, may instead be used to conveniently model the coupling effects which may exhibit asymmetry and multiple peaks.

Whilst a fully analytical model for constructing a univariate PDF or joint PDF for a multivariate distribution may be appealing or desirable, in practical terms this would in general result in an excessive and unnecessary number of parameters. A more practical solution if the underlying statistical data is available through for example Monte Carlo simulations as discussed by Smith et al. [35] and Armstrong [36] when pre-processing the statistical data, is to directly utilize the available numerical data with either an empirical beta copula as discussed by Segers et al. [30] or alternately through a kernel density estimate approach as discussed by O'Brien et al. [37] when post-processing the statistical data. These approaches are conceptually powerful and simple enough to apply for arbitrary distributions including highly non-Gaussian distributions, avoid unnecessarily complicated fitting of equation parameters, have readily available computational implementations, but however all require a large number of Monte Carlo simulation events which is not always feasible in particular types of experiments in some metrology laboratories.

A useful approach when analysing the post-processed data when non-Gaussian behaviour for the PDF is present and appropriately modelled, is to utilize Rosenblatt transformations to decompose the marginal distributions as discussed by Ramnath [38] such as when conditional PDFs must be calculated when performing a measurement uncertainty analysis when some of the PDF inputs are known or constrained.

2.2 Fluid theories

Fluid mechanics problems in engineering utilizes the concept of a friction factor λ in pipe flow which is only mathematically present on the boundary conditions of a fluid/solid interface such as a pipe wall, as internal fluid friction within the fluid is fully mathematically accounted for from knowledge of the fluid dynamic absolute viscosity µ.

Although a microscopic boundary condition such as the surface roughness may be considered to not have a very significant effect on the overall performance of a macroscopic system a recent investigation by Khanjanpour and Javadi [39] who performed Computational Fluid Dynamics (CFD) studies to investigate the effect of surface roughness on a Darrieus Hydro (DH) turbine concluded that a surface rough height variation of up to 1000 µm increased the turbulence and decreased the active fluid energy, to the extent that a turbine's overall drag coefficient was 20% higher than a smooth turbine blade with a zero roughness height. This study thus demonstrated that microscopic surface roughness effects can have significant macroscopic equipment and instrument consequences in flow measurement equipment accuracy, particularly in metrology pressure and flow systems where laboratory instrument accuracies must typically range from a fraction of a percent to several parts-per-million. A recent review by Kadivar et al. [40] reports on the current state of experimental and theoretical knowledge for fluid flows over general rough surfaces, and it is anticipated that Direct Numerical Simulation (DNS) work will become increasingly common in CFD based simulation studies of surface roughness effects in pipes.

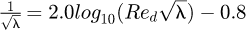

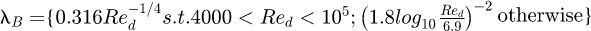

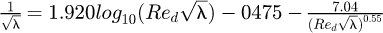

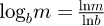

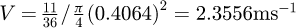

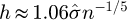

In the special case of pipe flows Ludwig Prandtl, widely considered to be the father of modern aerodynamics, originally performed a mathematical analysis to account for friction data in terms of an explicit equation of the form  where

where  is the Reynolds number for a pipe with a hydraulic diameter of d, V is the pipe bulk flow velocity,

is the Reynolds number for a pipe with a hydraulic diameter of d, V is the pipe bulk flow velocity,  is the fluid kinematic viscosity, µ is the fluid dynamic viscosity, and ρ is the fluid mass density. An approximate alternative to the implicit form for λ includes the well known Blasius equation

is the fluid kinematic viscosity, µ is the fluid dynamic viscosity, and ρ is the fluid mass density. An approximate alternative to the implicit form for λ includes the well known Blasius equation  which may be considered as an approximation to the more accurate Prandtl equation for a more limited range of Reynolds numbers.

which may be considered as an approximation to the more accurate Prandtl equation for a more limited range of Reynolds numbers.

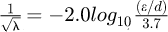

Experimental work by Charles-Augustin de Coulomb discovered that the pipe wall surface roughness directly effected the value of the friction however this effect is considered negligible for laminar pipe flow when the Reynolds number is relatively small. At higher Reynolds numbers with rough surfaces for a fully rough flow the friction equation developed by Nikuradse takes the form  where ϵ is representative wall roughness height and (ϵ/d) is known as the dimensionless roughness ratio.

where ϵ is representative wall roughness height and (ϵ/d) is known as the dimensionless roughness ratio.

The physical difference between a fully rough regime and a transitionally rough regime is essentially dependent on the prevailing Reynolds number. For low Reynolds numbers where the fluid velocity is small the effect of the viscosity is more dominant and will tend to damp out disturbances caused by the localized surface roughness and this flow regime is categorized as hydraulically smooth. On the other hand, as the fluid velocity increases then so to does the Reynolds number, and with this flow regime the induced turbulent eddies near the pipe surface are not fully damped by the viscosity, and so the drag caused by the shape of the irregularities on the surface tends to contribute more to the overall drag. At a certain value of Reynolds number the drag becomes dominant and this flow regime is referred to as fully rough. Thus a pipe flow regime varies from hydraulically smooth, to transitionally rough to fully rough, and this is correlated to the prevailing Reynolds number.

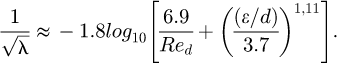

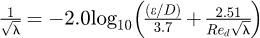

These earlier historical fluid mechanics experimental studies were subsequently utilized by Colebrook [41] to cover the transitionally rough range by combining the hydraulically smooth wall model of Prandtl and the fully rough model of Nikuradse into a single interpolation formula for the pipe friction in the transitional rough regime of the form

The above implicit non-linear Colebrook formula for the pipe friction λ where ϵ is also known as an equivalent sand grain roughness corresponding to the diameter of a grain of sand, is now generally considered to be the accepted universal engineering design formula to directly compute the turbulent friction factor in a pipe with appropriate numerical routines implemented in software packages such as Fortran or Matlab.

Different schemes exist that attempt to correlate the equivalent sand grain roughness height ϵ, also written as ks to signify the Nikuradse sand grain size, with the surface roughness height statistical data as shown in Figure 1. One particular algorithm to estimate the equivalent sand grain roughness developed by Adams et al. [42] is  .

.

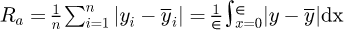

Alternative schemes reported by Shockling et al. [43] for the arithmetic average is  and for the root-mean-square (RMS) is

and for the root-mean-square (RMS) is  , where

, where  is the fluctuation which may be positive or negative of the profile surface roughness height relative to the mean height

is the fluctuation which may be positive or negative of the profile surface roughness height relative to the mean height  of the pipe wall corresponding either to a ‘peak’ or ‘valley’, and where L is a suitable interval of measurement to provide a reasonable estimate of the surface roughness properties. Typical spot sizes for surface roughness measurements in this study on selected regions on the pipe wall are 0.8mm×1.2mm, and typical values for the arithmetic and RMS averages for steel pipes are Ra ≈ 0.116μm and Rq ≈ 0.15μm.

of the pipe wall corresponding either to a ‘peak’ or ‘valley’, and where L is a suitable interval of measurement to provide a reasonable estimate of the surface roughness properties. Typical spot sizes for surface roughness measurements in this study on selected regions on the pipe wall are 0.8mm×1.2mm, and typical values for the arithmetic and RMS averages for steel pipes are Ra ≈ 0.116μm and Rq ≈ 0.15μm.

The application of the above formula for Ra by Adams et al, or alternative competing schemes, to implement the algorithm assumes that xy profile data from longitudinal x scans of the surface height y along the surface are available, such that for a small interval along the surface the arithmetic average of absolute values Ra is calculated, where  is the mean line of the height and

is the mean line of the height and  is the difference between a measured point on the surface and the mean line, and the corresponding value of the equivalent sand grain roughness ∊ is solved from the integral equation. In this manner the value of ϵ may be conveniently calculated from arbitrary surface roughness xy profile measurements of the pipe surface. At the present time of writing there does not exist a unique universally accepted method to convert geometrical roughness measurement quantities such as Ra or Rq into an equivalent Nikuradse sand grain size ϵ=ks, although recommendations such as that by Hama [44] propose that for machined surfaces with an approximately Gaussian variation in roughness that ks ≈ 5krms whilst more recent studies by Zagarola and Smit [45] suggest that for honed and polished surfaces that ks ≈ 3krms.

is the difference between a measured point on the surface and the mean line, and the corresponding value of the equivalent sand grain roughness ∊ is solved from the integral equation. In this manner the value of ϵ may be conveniently calculated from arbitrary surface roughness xy profile measurements of the pipe surface. At the present time of writing there does not exist a unique universally accepted method to convert geometrical roughness measurement quantities such as Ra or Rq into an equivalent Nikuradse sand grain size ϵ=ks, although recommendations such as that by Hama [44] propose that for machined surfaces with an approximately Gaussian variation in roughness that ks ≈ 5krms whilst more recent studies by Zagarola and Smit [45] suggest that for honed and polished surfaces that ks ≈ 3krms.

In the original study by Colebrook [41] for the data with transitional roughness the equation took the form  where ks is the equivalent Nikuradse sand-grain roughness value for the pipe inner wall surface and λ is the friction factor that is defined in terms of the pipe flow pressure gradient

where ks is the equivalent Nikuradse sand-grain roughness value for the pipe inner wall surface and λ is the friction factor that is defined in terms of the pipe flow pressure gradient  the pipe inner diameter D, the fluid mass density ρ, and the center-line bulk velocity

the pipe inner diameter D, the fluid mass density ρ, and the center-line bulk velocity  as

as  .

.

A graphical alternative to the Colebrook equation is the well known Moody chart which is accurate to approximately ±15% and is commonly used in many engineering design studies for initial scoping designs for both circular as well as non-circular pipe flows. An alternative explicit formula developed by Haaland [46] which has an accuracy of ±2% approximates the implicit Colebrooke equation as

Once the pipe friction factor λ has been determined the subsequent analysis of pipe networks flows may be performed based on the work of Jeppson [47] as a branch of hydraulic engineering at the interface of mechanical, civil and chemical engineering. These calculations are typically performed by using knowledge of λ to calculate the equivalent head loss hf in meters corresponding to a pipe pressure drop caused by the pipe friction factor expressed as a column of water for the length L of the pipe using the formula 1.

When the surface roughness of a pipe has a known PDF for the value of ϵ as pϵ(ξ) then this uncertainty information may be propagated into the measurement model for λ in order to produce a corresponding PDF gλ(η) that models the uncertainty variation of the pipe friction factor, which may be Gaussian or possibly non-Gaussian, and which in turn may then be used to calculate the uncertainty in the equivalent head loss hf with a PDF ghf(ξ), that can then finally be used in quality engineering studies for investigating the reliability of pipe flow network systems.

Subsequent fluids research work by McKeon et al. [48] investigated fully developed turbulent pipe flow data in the range 31 × 103 ≤ Red ≤ 35 × 106 and utilized a generalized logarithmic law in order to combine the high Reynolds flow data with earlier low Reynolds flow data in the Blasius equation in order to construct a new equation for the friction that is accurate to ±1.4% for the entire low/high Reynolds flow data and which agrees with the Blasius model at low Reynolds numbers to within ±2.0%, where the new equation for the friction term λ= f is accurate to ±0.002 and takes the form of an implicit non-linear equation  .

.

Shockling et al. [43] studied the effects of roughness for turbulent pipe flow for fully developed flow with experimental data in the high Reynolds range 51 × 103 ≤ Red ≤ 21 × 106 and concluded that the traditional Moody diagram for the friction is inaccurate in the transitionally rough regime and should be used with caution. Their investigation demonstrated that in the transitionally rough regime that the friction factor follows a Nikuradse type of inflectional relationship rather than the monotonic Colebrook type of behaviour based on a Buckingham π dimensional analysis which proved that the friction factor follows a functional relationship such that  where π1 is an unknown functional that must be determined. Later experimental work by Allen et al. [49] with Reynolds data for 57 × 103 ≤ Red ≤ 21 × 106 confirmed an inflectional relationship for the friction factor instead of the expected monotonic behaviour predicted from the traditional Moody diagram and which validated the Townsend outer-layer similarity hypothesis for rough walled pipe flows.

where π1 is an unknown functional that must be determined. Later experimental work by Allen et al. [49] with Reynolds data for 57 × 103 ≤ Red ≤ 21 × 106 confirmed an inflectional relationship for the friction factor instead of the expected monotonic behaviour predicted from the traditional Moody diagram and which validated the Townsend outer-layer similarity hypothesis for rough walled pipe flows.

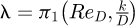

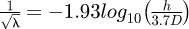

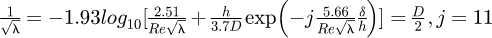

Afzal [50] analysed friction factor data from the available literature and investigated the influence of roughness parameters including the arithmetic mean roughness Ra, the roughness mean peak to valley height Rz, the root-mean-square (RMS) value of roughness Rq, the ratio Rq/H where H is the surface texture Hurst parameter, and h is the traditional equivalent sand grain roughness value. This study concluded that the pipe friction factor λ can be modelled with the Prandtl smooth pipe friction factor equation provided that the traditional Reynolds number Re is instead replaced by a new roughness Reynolds number defined as  where ϕ is a new non-dimensional roughness scale. Different types of formulae were found to be necessary for various roughness scales and may be summarized in terms of the equivalent sand grain roughness h for fully rough and transitionally rough pipes as

where ϕ is a new non-dimensional roughness scale. Different types of formulae were found to be necessary for various roughness scales and may be summarized in terms of the equivalent sand grain roughness h for fully rough and transitionally rough pipes as  for fully rough and

for fully rough and  for transitional rough flows.

for transitional rough flows.

The above fluid theories for modelling the pipe friction factor may then be incorporated into Computational Fluid Dynamics (CFD) based simulation studies of flow measurement equipment and instruments such as that by Wang et al. [51] who investigated an elbow flow meter, and that by Gace [52] who investigated a Coriolis Mass Flowmeter (CFM), where the friction factor λ affects the CFD boundary conditions. These CFD studies would incorporate reference liquid density measurements of actual oils and associated working fluids that have measurement traceability back to an appropriate national metrology institute as discussed by Akcadag and Sariyerli [53].

|

Fig. 1 Geometry of pipe inner wall surface roughness measurements scheme illustrating positive amplitudes in peaks and negative amplitudes in valleys in Nikuradse equivalent grain size diameter experiments. |

3 Mathematical modelling

3.1 Synthesizing Independent PDFs

Techniques for mathematically combining independent measurements that are modelled with PDFs as continuous distributions is an emerging area of metrology research with the increased adoption of the Monte Carlo based uncertainty approach of the GUM Supplement 1 [54] for univariate measurements and the GUM Supplement 2 [55] for multivariate measurements. At the present time of writing there is no widely accepted methodology within the metrology field to uniquely combine independent PDFs of measurement distributions as the existing techniques are orientated for combining discrete measurements.

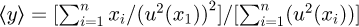

Particular methods for combining independent discrete measurements include the use of weighted arithmetic averages in terms of Graybill-Deal statistical estimators for a sequence of independent measurements {x1, x2, ..., xn} of a measurement y where the expected value of y is then calculated as  with an estimated uncertainty u(y) calculated as

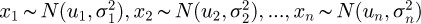

with an estimated uncertainty u(y) calculated as  as discussed by Ramnath [56] for experiments. An alternative is the application of the standard methodology for computing a key comparison reference value (KCRV) from multiple independent laboratory measurements as reported by Cox [57]. The existing methods for combining discrete measurements are only technically valid in the special case that each of the individual estimates independent measurements {x1, x2, ..., xn} follow an underlying Gaussian distribution i.e.

as discussed by Ramnath [56] for experiments. An alternative is the application of the standard methodology for computing a key comparison reference value (KCRV) from multiple independent laboratory measurements as reported by Cox [57]. The existing methods for combining discrete measurements are only technically valid in the special case that each of the individual estimates independent measurements {x1, x2, ..., xn} follow an underlying Gaussian distribution i.e.  or equivalently can be reasonably approximated with a Gaussian distribution. If the underlying discrete measurements cannot be approximated with Gaussian distributions then weighted means will produce inconsistent and statistically inaccurate estimates of the consensus value of x and its associated uncertainty µ(x). Whilst individual laboratories at various national metrology institutes in their primary and working standards may utilize the GUM supplements to generate an accurate prediction of the measurement PDF, these calculations at present have to be approximated as Gaussian PDFs in order to utilize this uncertainty information in inter-laboratory comparisons.

or equivalently can be reasonably approximated with a Gaussian distribution. If the underlying discrete measurements cannot be approximated with Gaussian distributions then weighted means will produce inconsistent and statistically inaccurate estimates of the consensus value of x and its associated uncertainty µ(x). Whilst individual laboratories at various national metrology institutes in their primary and working standards may utilize the GUM supplements to generate an accurate prediction of the measurement PDF, these calculations at present have to be approximated as Gaussian PDFs in order to utilize this uncertainty information in inter-laboratory comparisons.

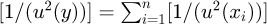

Current research by Willink [58] for combining two independent PDFs has investigated the subtle inconsistencies from a Bayesian statistics framework that may potentially arise when combining two independent PDF estimates of a single quantity. To illustrate the logical inconsistencies which may arise if for example two PDFs p1(x) and p2(x) representing independent sets of information about an unknown quantity are available, then this knowledge may be synthesized and combined into a single unique PDF for the unknown x as  . This formula is a special case where the measurand is given by the linear equation y = x and the probability of the measurement output is the same as the model input so that formally py(η) ∼ gx(ξ) using standard metrology uncertainty analysis from the GUM. It is known to be mathematically correct and consistent in the special case where there are two or more independent PDFs that directly specify knowledge for a single quantity x that does not have any significant functional dependencies on other additional measurement quantities and may therefore be directly utilized to correctly combine the surface roughness PDFs later in this paper.

. This formula is a special case where the measurand is given by the linear equation y = x and the probability of the measurement output is the same as the model input so that formally py(η) ∼ gx(ξ) using standard metrology uncertainty analysis from the GUM. It is known to be mathematically correct and consistent in the special case where there are two or more independent PDFs that directly specify knowledge for a single quantity x that does not have any significant functional dependencies on other additional measurement quantities and may therefore be directly utilized to correctly combine the surface roughness PDFs later in this paper.

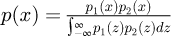

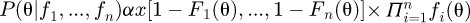

Clemen and Winkler [59] report on an interesting possible use of copulas for combining expert knowledge of a parameter θ by synthesizing the independent PDFs fi(θ) with corresponding CDFs Fi(θ) so that the posterior distribution is  . In this above equation θ(x) denotes some parameter which depends on x, and c[u1, ..., un], where u1 = 1 − F1(θ), ..., un = 1 − Fn(θ) denotes the copula density function outlined in an earlier metrology study by Ramnath [32]. Clemen & Winkler report that a potential benefit of the use of copulas for synthesizing independent expert judgements is that the individual expert judgements for the final parameter θ are entirely separate from any underlying dependence characteristics which are made separately and directly encoded into the copula density function.

. In this above equation θ(x) denotes some parameter which depends on x, and c[u1, ..., un], where u1 = 1 − F1(θ), ..., un = 1 − Fn(θ) denotes the copula density function outlined in an earlier metrology study by Ramnath [32]. Clemen & Winkler report that a potential benefit of the use of copulas for synthesizing independent expert judgements is that the individual expert judgements for the final parameter θ are entirely separate from any underlying dependence characteristics which are made separately and directly encoded into the copula density function.

3.2 Maximum statistical entropy

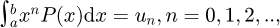

The original formulation of the principle of maximum statistical entropy to the PDF problem was by Mead and Papanicolaou [60] who utilized the fundamental equation  , with n ∈ ℕ that links a univariate PDF P(x) where x. is a random variable to the statistical raw moments

, with n ∈ ℕ that links a univariate PDF P(x) where x. is a random variable to the statistical raw moments  . Later work by Bretthorst [9] revisited the original problem by comparing the methods of binned histograms in multiple dimensions, kernel density estimation, and the method of maximum entropy of moments in an earlier attempt to synthesis or combine the two different competing approaches of the maximum entropy (MaxEnt) and the Bayesian statistics formulation for determining the optimal probability density function.

. Later work by Bretthorst [9] revisited the original problem by comparing the methods of binned histograms in multiple dimensions, kernel density estimation, and the method of maximum entropy of moments in an earlier attempt to synthesis or combine the two different competing approaches of the maximum entropy (MaxEnt) and the Bayesian statistics formulation for determining the optimal probability density function.

More recent work by Armstrong et al. [36] has succeeded in developing a more advanced mathematical new hybrid method that combines the maximum entropy and Bayesian statistics competing approaches for estimating the optimal PDF in the particular case where there is limited knowledge of the statistical moments or when there is noisy data that pollutes the accuracy of the statistical moments. This newer method, simply termed the MaxEnt/Bayesian approach for brevity, is applicable to large scale real world engineering problems where it is infeasible and in some cases simply impractical with available technology to adequately generate a very large number of Monte Carlo simulation events.

The PDFs generated with the MaxEnt/Bayesian approach can theoretically be refined and made more accurate as newer information on the a priori PDFs becomes available by incorporating the newer measurement uncertainty information into the Bayesian scheme using a Metropolis algorithm with a Markov Chain Monte Carlo strategy (MCMC/Metropolis) as discussed in more technical detail by Armstrong et al. [36].

In the particular area of scientific metrology the measurement uncertainty problems at the present time of writing are typically of a much smaller scale albeit at a significantly higher scientific accuracy level with less statistical noise when compared to measurement problems encountered within industry. As a result the maximum statistical entropy method is generally more appropriate for metrology work at primary scientific standards level than the MaxEnt/Bayesian method. For many representative applications work in mechanical, civil and chemical engineering in various metrology fields it is usually possible to generate a sufficient number of Monte Carlo simulations with modern physical multi-core laptops/workstations or rented virtual cloud computing services such as the Amazon Elastic Compute Cloud (Amazon EC2) service that are amenable to deploying open source codes such as OpenFOAM as discussed by Jahdali et al. [61] that can then be used to accurately calculate a sufficient number of statistical moments.

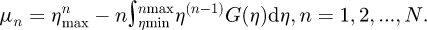

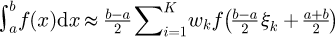

Under these conditions, using the standard calculus integration by parts formula ∫udv = uv − ∫ vdu and noting that the PDF f(x) may equivalently be defined as  with the CDF F(x) it then follows noting that F(xmin) = 0 and F(xmax) = 1 by definition from statistical theory, that the corresponding raw statistical moments μn for a measurement model output random variable η is then just

with the CDF F(x) it then follows noting that F(xmin) = 0 and F(xmax) = 1 by definition from statistical theory, that the corresponding raw statistical moments μn for a measurement model output random variable η is then just

In the above formula the actual CDF F(η) in the definition of the statistical moment µn may be conveniently approximated with an empirical cumulative distribution function (ECDF) that does not require any knowledge of the PDF. The zeroth moment  is automatically known to be unity from standard statistical theory. The GUM Supplement 1 [54] provides a simple piece-wise interpolating formulae for the underlying discrete approximation G(η) with the actual Monte Carlo data Ω.

is automatically known to be unity from standard statistical theory. The GUM Supplement 1 [54] provides a simple piece-wise interpolating formulae for the underlying discrete approximation G(η) with the actual Monte Carlo data Ω.

A useful benefit of computing the statistical moments in terms of the CDF instead of the PDF is that integration is well known by mathematicians to “smooth out” numerical noise and this provides a very convenient and useful numerical strategy to avoid the more difficult problem of calculating derivatives of noisy data encountered later in this paper.

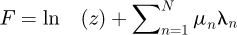

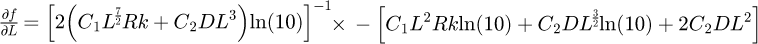

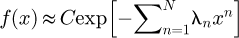

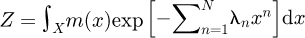

Once the raw statistical moments µn are known, the principle of maximum statistical entropy approaches the determination of the PDF by maximizing the Boltzmann-Shannon entropy function defined as S[f(x)|m(x)] ≔ − ∫ x∈xf(x)ln[f(x)/m(x)]dx where m(x) contributes as an invariant measure. Armstrong et al. [36] report that S[f(x)|m(x)] is actually equal to the negative of the Kullback-Leibler divergence, which means in practical terms that the statistical entropy provides for a mathematical technique to determine the optimal PDF. This is achieved by formulating an equivalent Lagrangian function using the earlier approach of Mead and Papanicolaou [60]. Omitting the theoretical details for brevity, the final result for computing the unknown PDF f(x) is then

In the above formula x is the random variable and X is the space in which x resides, λn are unknown Lagrangian multipliers which must be solved for from knowledge of statistical moments µn, and Z is essentially a type of normalization constant to ensure mathematical and statistical consistency. For a univariate PDF if the limits of x are a ≤ x ≤ b then X=[a,b] is just the closed interval on the real line. Spaces for X which is the domain in which x resides in may be extended to higher dimensions for x ∈ ℝn = ℝ × … × ℝ. Although the “shape” or topology of X in higher dimensional spaces may possibly be irregular in non-Gaussian PDEs in most practical cases X will conservatively be constrained either to correspond to a hyper-ellipsoidal region if the PDF is roughly Gaussian or alternatively to a higher-dimensional rectangular box in ℝn to be conservative if the PDF is non-Gaussian as outlined earlier by Ramnath [62]. The invariant measure m(x) is an initial approximation to f(x) and the MaxEnt approach provides for a way to incorporate the estimate m(x) in a unique equation, where λn are unknown real parameters technically known as Lagrange multipliers which must be solved for. Mead and Papanicolaou [60] mathematically proved that the Lagrange multipliers may be uniquely determined by minimizing the free energy defined as

The presence of the natural logarithm of the partition function term ln(Z) in the above formula necessitates an unconstrained nonlinear optimization in higher dimensional spaces  as the number N of the Lagrange multipliers increases.

as the number N of the Lagrange multipliers increases.

4 Numerical simulations

4.1 Synthesizing non-Gaussian input PDFs

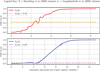

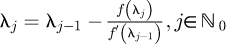

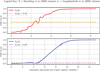

Experimental data for a pipe surface roughness ϵ = ks reported by Shockling et al. [43] and later Langelandsvik et al. [63] both demonstrate a distribution that exhibits multiple distinct peaks as shown in Figure 2 that demonstrate a distinctly non-Gaussian PDF for a pipe friction factor flow measurement uncertainty analysis, which suggests that the pipe friction factor λ may also exhibit a non-Gaussian PDF.

The earlier data by Shockling et al. has a spread of ±8 μm whilst that by Langelandsvik et al. has a spread of ±15 μm. The physical interpretation of a positive value of grain size with ks ≥ 0 is that this value of ks occurs above the mean value of the surface i.e. is a peak amplitude, whilst a negative value of grain size with ks ≤ 0 occurs below the mean value of the surface i.e. is a valley amplitude.

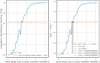

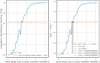

Physically in any fluid pipe flow measurement the equivalent grain size ks will always be non-zero, thus in order to perform an analysis it is therefore necessary to first post-process the bimodal PDF data in order to avoid a negative value of ks. This can be conveniently achieved by taking the absolute value of the surface roughness data so that positive amplitudes measured as peaks above the mean surface remain unaltered whilst negative amplitudes measured as valleys below the mean surface become equivalent positive roughness values. In this manner, two sets of experimental roughness data, one for the roughness amplitudes above the mid-surface and another for the roughness amplitudes below the mid-surface, may be obtained for the pipe surface roughness profiles. This set of experimental datasets can then be synthesized with only the absolute surface roughness value of ks as only positive values of ks is mathematically valid in the Colebrook equation and has a physical meaning in a fluid dynamics analysis for calculating a pipe friction factor λ. Referring to the graph in Figure 2 it may be observed that each of these constituent PDFs are clearly asymmetric distributions and must be combined in a mathematically consistent manner. By taking only the absolute values of the surface data for each of the datasets by Shockling et al. [43] and Langelandsvik et al. [63] the two independent PDFs may be generated as shown in Figure 3.

Letting  and

and  denote the corresponding valley roughness data and peak roughness data for the Shockling et al. dataset, with random variables ξv and ξp for the valley and peak surface roughness variables, it follows that from the previous section that these two individual PDFs may then be combined as

denote the corresponding valley roughness data and peak roughness data for the Shockling et al. dataset, with random variables ξv and ξp for the valley and peak surface roughness variables, it follows that from the previous section that these two individual PDFs may then be combined as  to yield a single combined PDF that obeys the normalization condition

to yield a single combined PDF that obeys the normalization condition  , with a similar expression

, with a similar expression  for the combined Langelandsvik et al. dataset.

for the combined Langelandsvik et al. dataset.

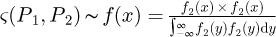

The mathematical notation &(P1,P2) for combining two or more PDFs P1 ∼ f1(ξ), P2 ∼ f2(ξ) into a single PDF is termed conflation following the earlier work by Hill [64] where the conflation is mathematically defined as  This conflation formula by Hill is an earlier special case of the later more general analysis by Willink [58].

This conflation formula by Hill is an earlier special case of the later more general analysis by Willink [58].

|

Fig. 2 PDFs of the inner wall surface roughness for commercial grade steel pipes reported by Shockling et al. [43] and Langelandsvik et al. [63]. |

|

Fig. 3 Comparison of equivalent PDFs of the peak and valley amplitudes reported by Shockling et al. [43] and Langelandsvik et al. [63]. |

4.2 Statistical sampling from a non-Gaussian PDF

For the pipe surface roughness amplitude measurements it may be observed that both of the resulting combined PDFs from the Shockling et al. and Langelandsvik et al. data-sets are both clearly not Gaussian PDFs. It may also be observed that both datasets exhibit asymmetry whilst only the Shockling et al. dataset demonstrates multiple peaks. The corresponding cumulative distribution functions (CDFs) are shown in Figure 3 from which a technical challenge posed by the multiple peaks and skewness may be observed. In a monotonic PDF curve the standard technique to sample from the underlying distribution is to generate a random number between zero and unity that follows a rectangular distribution and to then read off the corresponding random variable ξ from the CDF curve since 0 ≤ F(ξ) ≤ 1 by definition. For symmetric Gaussian PDFs the corresponding CDF is always monotonic in a graph of the CDF for F(ξ) versus ξ data points and it is straightforward to perform an interpolation for monotonically increasing data points.

By contrast the key technical difficulty and technical implementation issue which results due to the combination of asymmetry and multiple peaks in non-Gaussian PDFs, is that a single random number, say 0.43 in the Shockley et al. curve FS(ξ) = 0.43 represented by the dashed orange line, would yield multiple possible values ξ1 ≈ 2.3μm, ξ2 ≈ 2.6μm, ξ3 ≈ 2.8μm of the corresponding random variable ξ that can all technically simultaneously solve the equation F(ξ) = 0.43. A similar non-monotonic behaviour also occurs in the Langelandsvik et curve FL(ξ) = 0.02 represented by the dashed magenta curve where possible solutions are ξ ≈ 2.2μm, ξ ≈ 4.1μm, ξ ≈ 4.6μm. When performing a Monte Carlo simulation an arbitrarily large number of multiple roots would also occur near F(ξs) = 0.43 e.g. 500 random points for 0.42 ≤ F(ξS) ≤ 0.45 and similarly say 250 random points near 0.01 ≤ F(ξL) ≤ 0.03. This non-unique sampling problem would be exacerbated with noisy data with multiple maxima/minima. This is considered to be a unique metrology uncertainty problem which has not been previously encountered, and does not appear to have been previously solved and reported within the available technical and scientific literature.

The key mathematical challenge which arises in sampling from a non-Gaussian cumulative distribution function is therefore the presence of multiple roots for the nonlinear function F(ξ) which is caused by the presence of multiple maxima/minima. In general, a non-Gaussian CDF may exhibit multiple maxima/minima for a corresponding range 0 ≤ F(ξ) ≤ 1 of random variables ξmin ≤ ξ ≤ max and it is not appropriate to “smooth out” these fluctuations as it would cause a sampling bias, noting that there is a fundamental difference in smoothing noisy statistical data and eliminating physically meaningful surface roughness amplitude variations that may be physically caused by peculiarities in machining and surface grinding of metallic pipes and other machined components.

The presence of multiple physically meaningful maxima/minima in the CDF curve causes a horizontal line to intersect the CDF curve multiple times. When a graph of F(ξ) of the vertical/ordinate data versus the ξ horizontal/abscissa data is plotted to determine a corresponding value of the random variable ξ from a specified value of F(ξ) = r this would then generate a non-monotonic curve. Standard numerical interpolations in software such as Matlab and Python all require a single x value and a single y value to make an interpolation in a curve y = f(x) unambiguous otherwise such a numerical routine would automatically fail. If a numerical routine only selects either a first or last value for a y value from a specified x value, from multiple possible values of y, this would then automatically introduce an artificial systematic bias when attempting to sample points from a non-Gaussian distribution. When sampling from a non-Gaussian distribution the x values correspond to F(ξ) which is assumed known and the y values correspond to ξ which is considered unknown and must be inferred. The problem of non-monotonicity is that a single x value allows for multiple y values.

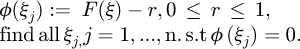

Noting that a random rectangular variable r∼R[0,1] may take an infinite number of possible values, there are then a very large number of possible intersections between a horizontal line with a constant value of r which may cut the curve F(ζ) at varying levels, and that may have several maxima/minima along the axis where the random variable lies. This fundamental statistical problem of sampling from a non-Gaussian distribution in this paper is proposed by mathematically reformulating it as finding all multiple roots for the nonlinear function ϕ(ξ) defined as

A straightforward attempt to solving the above problem by searching for all points where ϕ(ξ)is such that ϕ(ξ)< TOL can provide a rough initial estimate may be achieved by iterating through a discrete set of the pairs  however the specification of the magnitude of the tolerance TOL is a subjective decision and would logically vary on a case by case basis in different measure uncertainty experiments leading to a mathematically ill-posed problem. An incorrect specification of TOL could then unintentionally result in under or over estimating the number of roots i.e. the number of intersections of a curve r=const and F(ξ) that lie in an envelope of possible solutions for ϕ(ξ). If the incorrect number of multiple possible roots is solved by under counting or over counting the roots by an incorrect specification of TOL, then the corresponding set random variables that solve F(ξ) = r would introduce a systematic statistical sampling bias and lead to erroneous measurement uncertainty predictions for non-Gaussian systems.

however the specification of the magnitude of the tolerance TOL is a subjective decision and would logically vary on a case by case basis in different measure uncertainty experiments leading to a mathematically ill-posed problem. An incorrect specification of TOL could then unintentionally result in under or over estimating the number of roots i.e. the number of intersections of a curve r=const and F(ξ) that lie in an envelope of possible solutions for ϕ(ξ). If the incorrect number of multiple possible roots is solved by under counting or over counting the roots by an incorrect specification of TOL, then the corresponding set random variables that solve F(ξ) = r would introduce a systematic statistical sampling bias and lead to erroneous measurement uncertainty predictions for non-Gaussian systems.

This technical complexity of multiple roots in a non-Gaussian sampling is not present in a traditional Gaussian sampling approach where the data is monotonic. If the PDF is symmetric then the CDF would almost always be monotonic unless there is an extreme level of skewness present. Under the assumption of a monotonic data for F(ξ) versus ξ curve it is straightforward to sample in language such as Matlab or GNU Octave with the code fragment r=r and(), xival=interp1(Fdata − r, xidata, 0). This simplifying assumption of monotonic data would not apply for a CDF with multiple peaks, and a new computer sub-routine for numerically interpolating in non-monotonic data curves corresponding to statistical sampling from non-Gaussian distributions must instead be developed.

The lack of monotonicity in the CDF due to the non-Gaussian multiple peak nature of the distribution may theoretically solved by parametrizing the curve into piece-wise smooth segments that have the same gradient sign such that the CDF is the union of these individual sequential segments so that

Referring to the PDF data in Figure 3 which may be post-processed to calculate the CDF in Figure 4 using the standard statistical formula  where ξ is simply a dummy variable for performing the integration, it may be observed that when the gradient of F(ξ) changes from positive to negative a localized maxima occurs, or alternately when the gradient changes from negative to positive that a localized minima occurs. This geometrical change of gradient is the source of the non-monotonicity which would cause a conventional interpolation routine to fail, and suggests a convenient geometrical solution, namely to “simply break up the non-monotonic curve into a sequence of sequential segments which are each individually monotonic” in order to possibly take advantage of existing interpolation routines which work on monotonic data curve.

where ξ is simply a dummy variable for performing the integration, it may be observed that when the gradient of F(ξ) changes from positive to negative a localized maxima occurs, or alternately when the gradient changes from negative to positive that a localized minima occurs. This geometrical change of gradient is the source of the non-monotonicity which would cause a conventional interpolation routine to fail, and suggests a convenient geometrical solution, namely to “simply break up the non-monotonic curve into a sequence of sequential segments which are each individually monotonic” in order to possibly take advantage of existing interpolation routines which work on monotonic data curve.

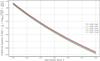

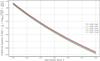

When decomposing a “messy” curve into a sequence of piece-wise smooth curve segments, a simple approach to avoid spurious maxima/minima peaks from noisy oscillations that artificially generate additional peaks is to use a Savitzky-Golay filter to smooth out the signal in the curve as shown in Figure 5 which uses a window length of 11 and a smoothing polynomial of order 5 with a nearest neighbour selection of points on either side to damp out fluctuations for the particular problem of pipe surface roughness and pipe friction factor examined in this paper. The particular parameters for filtering/smoothing signals would in general vary on a case by case basis in other metrology problems.

To sample from the smoothed/filtered non-Gaussian distribution would then involve the generation of a random variable r from a rectangular distribution r ∼ R[0, 1] and then selecting an appropriate segment Sj from the set of all sequential segments S1,S2,…,SN that has a range such that  . In this scheme, it is still technically possible for two or more different segments who may occur in different parts of the domain to each have a range that includes the sampled value r, i.e. it is technically possible to have multiple possible solutions to the equation F(͠ξ)=r in different regions of the domain as shown in Figure 6 which illustrates the fundamental mathematical complexity with non-Gaussian PDFs in a metrology uncertainty analysis.

. In this scheme, it is still technically possible for two or more different segments who may occur in different parts of the domain to each have a range that includes the sampled value r, i.e. it is technically possible to have multiple possible solutions to the equation F(͠ξ)=r in different regions of the domain as shown in Figure 6 which illustrates the fundamental mathematical complexity with non-Gaussian PDFs in a metrology uncertainty analysis.

The numerical strategy in this paper is to perform a sample from all of the possible segments and to then select all of the corresponding feasible value of ξ is considered to be theoretically valid if the sequence of segments are smooth and continuous. Continuity in the case of imperfectly constructed segments for the CDF F(ξ), can be enforced by joining discontinuous segments either with straight lines or with arcs that match the values and slopes of the segments on either side of the discontinuity such that no vertical segments in any discontinuous F(ξ) is present. The need for an absence of vertical segments joining discontinuities in a F(ξ) curve is because the PDF is defined as  and the derivative of a vertical line would be undefined and lead to a mathematically inconsistent PDF.

and the derivative of a vertical line would be undefined and lead to a mathematically inconsistent PDF.

Whilst this approach of decomposing a complicated curve with multiple maxima/minima into a sequence of simpler curves that do not exhibit localized peaks/troughs, avoids an additional sampling bias when performing a Monte Carlo simulation as it is relatively simple to randomly sample from a large number of events, it is more challenging to generate a sequence of random integers if for example 3 possible values ξ1,ξ2,ξ3 can all simultaneously solve F(ξ)=0, j=1,2,3. This situation would occur if a horizontal line cuts across three different segments. Choosing all possible values that solve F(ξj)=0 avoids the unnecessary complexity of generating random selections of integers to select from the available three choices of solutions, and also ensures that the simulation remains physically valid as technically all equivalent sand grain sizes in the roughness amplitude are physically possible.

Practical technical challenges with this proposed approach of partitioning the non-monotonic curve as a sequence of constituent monotonic curves includes that finding the exact point ξ at which the gradient  is zero based on discrete data can be technically challenging, particularly in cases where the slopes are nearly horizontal with zero gradient and which makes it difficult and ambiguous to uniquely estimate the intersection of the segments without accounting for the numerical resolution error.

is zero based on discrete data can be technically challenging, particularly in cases where the slopes are nearly horizontal with zero gradient and which makes it difficult and ambiguous to uniquely estimate the intersection of the segments without accounting for the numerical resolution error.

In addition to the above technical challenges with a theoretical solution of the non-monotonic interpolation problem, from a practical implementation point there may also be a very large number of segments to construct particularly if there are a large number of multiple maxima/minima in the CDF curve. This phenomena is illustrated in Figure 7 where the Shockley et al. PDF previously shown has a total of 14 local minima/maxima peaks for the range of possible random variable ξ.